schneiderbox

Kelvandor on Docker

November 14, 2021

Text Mode

Historically, I’ve considered the game-playing AIs that I write a bit of a pain to share with others.

The AI engine itself is typically a native command-line program that implements something akin to the Universal Chess Interface. The program gets passed a serialized game state, and it returns the move it thinks is strongest.

For example, a serialized game state for Kelvandor (which plays the game Node) looks like this:

```
r2g1b2r3g2y2v0g3y1b3r1b1y3000020000100001200000000000000002001120001000022000100000000201010000020002040
```

…to which the engine might respond:

```
b05
b09
trrgy
e
```
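For scripting, a call to an engine like this might be wrapped in Python roughly as follows. This is a minimal sketch under a hypothetical assumption: that the engine reads the serialized state as one line on standard input and prints its reply, one token per line, on standard output. The real Kelvandor invocation may pass the state differently, and ask_engine is an illustrative name.

```python
import subprocess
import sys


def ask_engine(engine_cmd: list[str], state: str, timeout: float = 60.0) -> list[str]:
    """Send one serialized game state to an engine process and return its reply lines.

    Hypothetical protocol: the engine reads the state from stdin and writes
    its move, one token per line, to stdout.
    """
    result = subprocess.run(
        engine_cmd,
        input=state + "\n",
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError if the engine exits non-zero
    )
    # Drop blank lines so callers only see the move tokens.
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    # Stand-in "engine" for demonstration: replies with the first three
    # characters of the state it receives. Swap in the real engine command.
    fake_engine = [sys.executable, "-c", "import sys; print(sys.stdin.readline()[:3])"]
    print(ask_engine(fake_engine, "r2g1b2r3"))  # prints ['r2g']
```

With the real engine binary in place of the stand-in (for instance ["./kelvandor"], if that is how it is invoked), the reply would come back as a list of tokens like the b05/b09/trrgy/e example above.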

I’m a lover of the command line, but even with the additional information and aesthetically dubious ASCII boards included in the debug information, I’ll admit a real user interface is needed to play a serious game.

A Real User Interface

Luckily, my friend and fellow AI testing team member Glen Tankserlsey will frequently write such a UI for the games we work on. He often gets a serviceable prototype up and running early in the project, just in time for me to begin testing basic versions of my engine. The problem is, these UIs are normally entirely client-side web apps, since Glen likes writing his own AIs in JavaScript.

Interfacing JavaScript running in a browser with a native executable requires additional work. Typically, I’ll use something like Flask to create a tiny web app that accepts web requests, calls the executable, and returns the move (and whatever other useful information there might be). Glen then adds an option to his UI to use an external API for AI moves.
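A tiny wrapper of that sort can be sketched as follows. To stay dependency-free, it swaps Flask for a bare WSGI callable from Python's standard library; Flask apps are WSGI applications too, which is the interface Gunicorn serves. The POST /move route, the JSON field names, the ./kelvandor command, and passing the state on stdin are all hypothetical.

```python
import json
import subprocess
from wsgiref.simple_server import make_server

# Hypothetical engine command; the real executable name and invocation may differ.
ENGINE_CMD = ["./kelvandor"]


def run_engine(state: str) -> list[str]:
    """Pass a serialized state to the engine on stdin and return its output lines."""
    out = subprocess.run(ENGINE_CMD, input=state + "\n", capture_output=True,
                         text=True, timeout=60, check=True).stdout
    return [line for line in out.splitlines() if line.strip()]


def make_app(engine=run_engine):
    """Build a WSGI app that answers POST /move with the engine's reply as JSON."""

    def app(environ, start_response):
        if environ.get("PATH_INFO") != "/move" or environ.get("REQUEST_METHOD") != "POST":
            start_response("404 Not Found", [("Content-Type", "text/plain")])
            return [b"not found"]
        # Read the JSON request body, e.g. {"state": "r2g1b2..."}.
        length = int(environ.get("CONTENT_LENGTH") or 0)
        body = json.loads(environ["wsgi.input"].read(length) or b"{}")
        move = engine(body.get("state", ""))
        start_response("200 OK", [("Content-Type", "application/json")])
        return [json.dumps({"move": move}).encode()]

    return app


if __name__ == "__main__":
    # Bind to all interfaces so the port is reachable when run inside a container.
    with make_server("0.0.0.0", 5000, make_app()) as server:
        server.serve_forever()
```

Passing a stub as engine makes the HTTP plumbing testable without the native binary. A browser UI loaded from a different origin (another host or port) would additionally need CORS headers from the API, which this sketch leaves out.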

So to play against one of my AIs, you need:

- The engine itself, compiled for your architecture

- A user interface, accessed via a proper web server (since browsers often put breaking restrictions around file:// URLs)

- A web app providing an API for the engine executable, and the proper environment set up to run the app

This isn’t a whole lot, but it’s not trivial either. It’s no problem for the AI testing team, since I’ll maintain a private deployment for us to use. However, it feels like a little much to ask of someone with a casual interest in playing a game against one of the AIs.

I could run a public deployment for each of my AIs, but I’m a little leery of taking on the administrative and security considerations that come with running Internet-exposed and compute-heavy C programs. It could definitely be done, and I might do it some time in the future, but I don’t have anything set up right now.

Can’t See the Docker for the Containers

It took me way too long to realize this is the exact sort of situation Docker helps with. My veil of ignorance lifted, I decided to start with Kelvandor, the newest AI.

I wrote a

Dockerfile that builds a container

for the engine and API, and a

Compose file that combines

the engine/API container with an Nginx container that serves the UI. Together, this means you can

bring up a functional environment with a single docker compose up command. Browse to

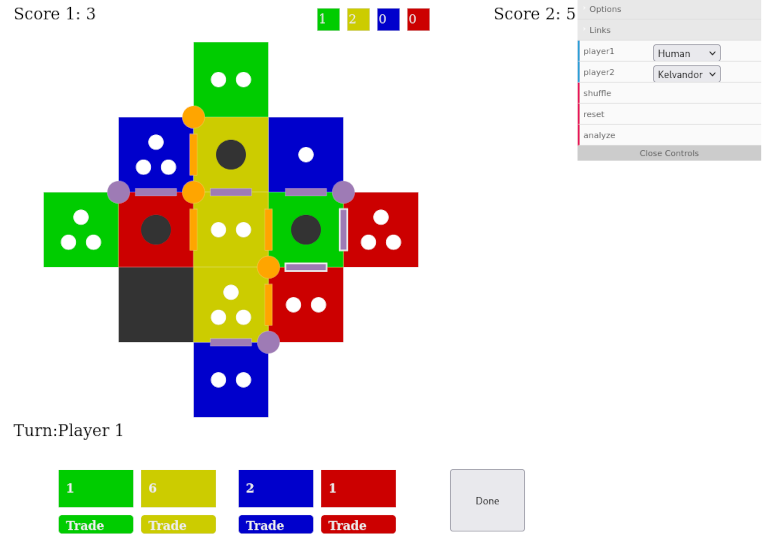

localhost:8000 and start playing!

The Dockerfile is pretty simple:

```dockerfile
FROM alpine

RUN apk update && \
    apk add g++ make python3 py3-pip && \
    pip install flask gunicorn

WORKDIR /opt/kelvandor

COPY ["src/", "."]

RUN ["make"]

ENTRYPOINT ["make", "httpapi"]
```

It starts from the recommended Alpine Linux image, installs the dependencies, copies Kelvandor’s src/ directory into the image, and builds the kelvandor executable with make. When started, the container runs make httpapi, which is the Makefile target for running the API app with Gunicorn.

The Compose file isn’t much worse:

```yaml
services:
  api:
    build: .
    ports:
      - "5000:5000"
  ui:
    image: nginx
    volumes:
      - ./html:/usr/share/nginx/html:ro
    ports:
      - "8000:80"
    depends_on:
      - api
```

It defines two services, api and ui. api is built using the above Dockerfile. Port 5000 on the container is bound to port 5000 on the host, so the user’s browser can reach the API when using the UI (localhost:5000 is what Gunicorn binds to in make httpapi, and it is also the UI’s default URL for the API).

The ui container uses the official Nginx image and mounts Kelvandor’s html/ directory at the webroot in the container. Since we’re mounting rather than copying, any changes to the UI in html/ are immediately present in the container.

Nginx’s port 80 is bound to port 8000 on the host, so the user can access the UI at localhost:8000.

Finally, the ui container depends_on the api container. This is optional here (everything would work without it), but it reflects the fact that the AI functionality in the UI won’t work without the API being available. The depends_on option also has a few practical effects: docker compose up starts api before ui (and Compose stops them in the reverse order), and running docker compose up ui on its own brings up api as well.
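With the short syntax used here, depends_on only controls start order; it does not wait for Gunicorn inside api to actually accept connections. If a script needs to know when the stack is ready after docker compose up, a small polling helper could cover that. The wait_for_port function below is a hypothetical sketch built on Python's socket module, not part of the Kelvandor project.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout elapses.

    Returns True as soon as something is listening, False if the deadline passes.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: the service is not up yet.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, wait_for_port("localhost", 5000) and wait_for_port("localhost", 8000) would check the two host ports from the Compose file. A successful TCP connection only shows that something is listening, not that the engine itself is healthy.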

Remember Next Time

There are still improvements to be made, both to the Docker configuration and the Kelvandor project as a whole. However, these AI projects have always been about iterative improvement, bringing technical advancements and lessons learned on the previous project to the next one. Docker is a useful tool, and I plan to remember it more in the future!